Dec 10, 2009

Network IPS Group Test Results Available

· IPS is a mature market. There is relatively little difference between products; thus, management and price are the key purchasing factors

· Best-of-breed products are more effective than those from strategic vendors who provide a wider range of products

· The market leader (from an installed base perspective) provides the best protection

· Organizations are protected as long as they keep their IPS systems updated

This group test set out to determine if these beliefs were correct. To garner the greatest participation and allay any potential concerns of bias, we invited all leading vendors to submit their products at no cost. Every vendor below brought and configured their best Network IPS products. All were generally available (GA); no Beta or otherwise unavailable products were included. The following is a current list of the products that were tested, sorted alphabetically:

1. Cisco IPS 4260 Sensor, version 444.0

2. IBM Proventia Network IPS GX4004, version 29.100

3. IBM Proventia Network IPS GX6116, version 29.100

4. Juniper Networks IDP-250, version 5.0.110090831

5. Juniper Networks IDP-600c, version 5.0.110090831

6. Juniper Networks IDP-800, version 5.0.110090831

7. McAfee M-1250, signature version 5.4.5.23

8. McAfee M-8000, signature version 4.1.59.23

9. Sourcefire 3D 4500, rules version 4.8.2.1

10. Stonesoft StoneGate IPS 1030, 5.0.1 build 5053 update package 261

11. Stonesoft StoneGate IPS 1060, 5.0.1 build 5053 update package 261

12. Stonesoft StoneGate IPS 6105, 5.0.1 build 5053 update package 261

13. TippingPoint (TP) 10 IPS, DV 2.5.2.7834

14. TippingPoint 660N IPS, DV 2.5.2.7834

15. TippingPoint 2500N IPS, DV 2.5.2.7834

Some vendors provided multiple test units of varying performance levels. Across the board, all vendors claimed identical protection across models.

The results will be shocking to most and have already generated plenty of buzz. 1,159 live exploits is the most ever tested. If you currently operate one of these products, or are considering investing in an IPS, the information in this exclusive test report is invaluable.

Dec 8, 2009

TippingPoint Tests

In August 2009, NSS Labs performed an independent test of the TippingPoint 10 product and determined that it blocked only 39% of common exploits. Subsequently, TP came to our lab for private testing and, as they stated, further assistance. TP customers can see the spike of hundreds of filters that appeared in October and November.

In early December, NSS Labs released its independent IPS group test of 15 different IPS products submitted by 7 vendors, including TP. The product improved marginally, but is rated ‘caution’ due to its subpar protection in our tests. Now TippingPoint has publicly complained on the TippingPoint blog that the test must be inaccurate because it didn’t correspond with the results of their private testing with NSS, with 'customer experience', or with their internal testing.

3 response points:

1st. These modern IPS products are so complex that customers will rarely be in a position to question or test a vendor properly. And they rarely do when it is a brand name. Very few enterprises have the sophisticated testing tools, expertise and access to exploits that the vendors and a professional security testing lab like NSS have. So, having a lot of customers does not necessarily mean they are aware of the true protection they are receiving. In fact, not knowing is a liability in itself for all involved.

2nd. RE: Private testing results. At NSS we don’t use the same attack set in our private testing as we do in public testing. That would be like getting a copy of the test and answers beforehand, and would give private clients an unfair advantage over other vendors. We do test the same vulnerabilities, but the specific exploits we use vary. This should underscore the integrity of NSS Labs testing principles and procedures. In general, differences in results could be attributable to signatures written too narrowly (e.g. for specific exploits rather than vulnerabilities) or to signatures written for a test lab environment.

3rd. We certainly cannot account for any vendor's internal testing procedures. However, the findings of our two previous tests were ultimately corroborated.

As for delaying the Network IPS Group Test Report: it would be unfair to enterprise readers not to disclose validated testing results that could help them mitigate threats their defenses might not stop as expected (withholding them could be considered irresponsible non-disclosure). A delay would also be unfair to the other 6 vendors who participated. As with the previous tests, NSS took great care to validate the results. Deciding to act positively upon them to deliver better customer protection is the next imperative.

Dec 7, 2009

Maintaining Test Integrity during Private Testing

· The same range of vulnerabilities is represented in both the private and public tests. However, different exploit variants are used between the two types of test to ensure vendors are writing vulnerability-based signatures in order to adequately protect their customers, and not simply writing exploit-specific signatures to perform well in testing. For example, private tests utilize a higher number of Proof Of Concept (POC) exploits and PCAPs, whereas public testing and certification relies exclusively on NSS’ unique and comprehensive live exploit test harness.

· Vendors who write vulnerability-based signatures rather than exploit-specific ones will achieve similar results in both private and public tests.

· Vendors that write signatures to catch POC PCAPs, but not real exploits and variants, may experience different test results between private and public tests.
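The difference between the two signature styles can be illustrated with a toy example. The sketch below is purely hypothetical: the `USER` command, the 64-byte limit, and both signature functions are invented for illustration and do not describe any vendor's product or any NSS test case. It assumes a service that overflows when the argument to `USER` exceeds 64 bytes.

```python
MAX_SAFE_ARG_LEN = 64  # hypothetical buffer size in the vulnerable service

# The publicly circulated proof of concept: a long run of "A" filler bytes.
POC_PAYLOAD = b"USER " + b"A" * 200 + b"\r\n"

# A variant exploit for the same flaw that uses different filler bytes.
VARIANT_PAYLOAD = b"USER " + bytes(range(0x30, 0x7B)) * 2 + b"\r\n"


def exploit_specific_signature(packet: bytes) -> bool:
    """Flags only traffic that contains the PoC's characteristic filler."""
    return b"A" * 100 in packet


def vulnerability_signature(packet: bytes) -> bool:
    """Flags any USER argument long enough to trigger the overflow."""
    prefix = b"USER "
    if not packet.startswith(prefix):
        return False
    argument = packet[len(prefix):].rstrip(b"\r\n")
    return len(argument) > MAX_SAFE_ARG_LEN


for name, packet in [("PoC", POC_PAYLOAD), ("variant", VARIANT_PAYLOAD)]:
    print(name,
          "exploit-specific:", exploit_specific_signature(packet),
          "vulnerability-based:", vulnerability_signature(packet))
```

In this toy setup the exploit-specific check catches the PoC but misses the variant, while the vulnerability-based check catches both and still lets a normal login such as `USER alice` through.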

Dec 6, 2009

Raising the Bar in Testing

It is important for NSS Labs to periodically raise the bar as the industry advances, both offensively and defensively. In other words, we increase the difficulty of the test to match the needs (as driven by cyber criminals' innovative new attacks), and the capabilities of vendors who have developed new approaches to countering the threats.

This is what NSS Labs has been doing over the past 2 years in particular: raising the bar. As such, our reports and the scores vendors receive on them deserve to be put into context. We have performed a number of different types of tests over the past few years:

1. Product Certification - a full review of the whole product; including security effectiveness, performance, manageability and stability.

2. Group Tests - comparative testing of a class of products from leading vendors. Due to the volume of vendors, the depth of the testing may be abbreviated and focused. The browser security, endpoint protection, and Network IPS tests are great examples of these.

3. Security Update Monitor (SUM) Tests - unique to the industry, NSS Labs has been testing IPS products on a monthly basis. Every quarter, we tally the average scores and bestow awards. The attacks in this test set are focused on vulnerability disclosures made the previous month. As such, the volume of new additions is generally in the couple dozen range. Overall, there are currently 300 entries.

4. Individual Product Reports - these are brand new detailed reports that were created during group testing. They capture the nitty gritty details that are rolled up into the group test.

5. Exposure Reports - An industry and NSS first. These reports list specific vulnerabilities that are NOT shielded by an IPS. This information is critical in helping organizations know where their protection is and is not. Contact us for a confidential demonstration. (Knowing can help identify appropriate mitigations, such as writing custom signatures, implementing new rules, resegmenting the network, or ultimately switching or adding a security product.)

By the Numbers:

Exploit selection and validation is a serious matter. Our engineers take care to identify the greatest risks to enterprises, based on commonly deployed systems, utilizing a modified CVSS.

Certifications performed in 2008 and 2009 tested 600 vulnerabilities. Compare this with other tests: the ICSA IPS test, for example, covers only 120 vulnerabilities (all server side). Our 600 is worth more than the 480 extra vulnerabilities alone suggest, because vendors did not know which ones they were, thus preventing the 'gaming of the test'.

SUM testing generally adds 20-30 vulnerabilities per month. That is a relatively small set, but it directly reflects popular attacks.

Our latest group test utilized 1,159 live exploits. That is nearly 2x the number we used previously for certifications (and nearly 10x more than the next lab).

NSS Labs Tests are Harder Than The Other Guys'...

Difficult, real-world tests are an important part of raising the bar. Marketers like to have big numbers, and when it comes to scores, 100% is the target. Unfortunately, that's not the reality of information security products. We at NSS do not expect any product to catch 100% of the attacks in any of our tests. If they do, we probably are not working hard enough (or the bad guys gave up and went home - unlikely). There are more threats than can be protected against, and depending on the vendor, the margin can be acceptable, or pretty significant.

Take the difficulty of the test into consideration when comparing products. The lower the bar, the easier it is to score 100%, and the less meaningful that score is. A 70% score on an NSS Labs Group Test (1,159 exploits) still represents about 6.7 times more validated protection than a 100% score on the 120 exploits in an ICSA Labs(r) test. And a 95% score on an NSS certification (600 vulnerabilities) represents roughly 4.75 times more.
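The comparison above is simple arithmetic: score multiplied by test size. Here is a minimal Python sketch using the test sizes quoted in this post (1,159, 600, and 120). Treating "validation" as score times test size is a simplifying assumption, since it ignores how difficult each individual exploit is, and the function name is made up for illustration.

```python
# Test sizes quoted in this post.
NSS_GROUP_TEST_EXPLOITS = 1159
NSS_CERTIFICATION_VULNS = 600
ICSA_TEST_EXPLOITS = 120


def attacks_blocked(score_pct: float, test_size: int) -> float:
    """Number of attacks blocked at a given percentage score."""
    return score_pct / 100 * test_size


# Baseline: a perfect score on the smaller test.
baseline = attacks_blocked(100, ICSA_TEST_EXPLOITS)

group_ratio = attacks_blocked(70, NSS_GROUP_TEST_EXPLOITS) / baseline
cert_ratio = attacks_blocked(95, NSS_CERTIFICATION_VULNS) / baseline

print(f"70% of {NSS_GROUP_TEST_EXPLOITS} vs 100% of {ICSA_TEST_EXPLOITS}: {group_ratio:.2f}x")
print(f"95% of {NSS_CERTIFICATION_VULNS} vs 100% of {ICSA_TEST_EXPLOITS}: {cert_ratio:.2f}x")
```

With these inputs the group test ratio comes out at about 6.76 and the certification ratio at 4.75. The certification figure depends on the 600-vulnerability test size being the right one to plug in.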

When we at NSS Labs raised the bar on this Q4 2009 IPS Group Test, we really cranked it up. So, if you're wondering why a vendor who previously scored in the 90s is scoring lower on the group test, it's not necessarily because they are slacking off. In most cases it is quite the opposite. Thus, one should be sure to compare products within the same test set and methodology. Ergo, the 80% product definitely bested the 17% product by a wide margin. Be very cautious of the latter. And reward those vendors who submitted to this rigorous testing in the first place.

Dec 4, 2009

NSS Labs Mission Revisited

For enterprises, that means helping them choose and implement better defenses. We do this by performing rigorous testing of leading products in various configurations and publishing test reports for purchase as an information service, much like other analyst firms such as Gartner, IDC, and Forrester. (However, we are the only ones that actually perform hands-on, comprehensive testing of security products.) There are several types of reports: individual certifications (full 360 reviews), comparative group tests, Security Update Monitoring, and our new Exposure Reports. The products in this information service help subscribers understand what is protected and what is not. Nothing protects 100%, so knowing the specifics is important.

For security product vendors, this means testing them against standardized evaluation criteria to establish a baseline, and drawing attention to key issues and requirements. We then test according to best practices methodologies. Our reports also reward those vendors that perform well, and they can use those for marketing. When it comes to improving products, vendors have great resources, and some of the smartest teams around. In addition, they often turn to outside experts for help. NSS is well equipped to assist with this type of private testing and consulting. However, we are always careful to maintain integrity during the process.

Nov 6, 2009

CISOs - the Wild List isn't

The Wild List:

- contains several hundred virus samples (922 in August 2009 to be exact)

- contains only viruses, except a couple of worms (Koobface and Conficker, plus dozens of variants of the same), which were added only a couple of months ago. There are no rootkits, trojans, downloaders, or spyware! Note: Trojans and downloaders are arguably the most prevalent initial infectors (exploits are another story)

- contains viruses that have been agreed upon by at least TWO antivirus researchers, who in almost all cases work for AV companies

- is generally 2-3 months behind emerging threats by the time folks agree

Now, this was a good idea back when there were a hundred viruses a month. But the volume and complexity of malware have outpaced the organization's ability to keep up, and the list has become less relevant.

In our opinion, the Wild List is NOT representative of threats on the Internet, and it is extremely biased by its reliance on sample sharing and its narrow definition of scope. Should you be basing your purchasing decisions on certifications that use it? (ICSA Labs, VB100, West Coast Labs)

Oct 8, 2009

Evading Anti-malware Products

Why? Because the bad guys are smart and aggressive. And remember, cybercriminals need only find one open door to get in, whereas defenders need to protect all the doors.

Cybercriminals are employing a plethora of techniques in a highly automated fashion to evade detection. Gunter Ollmann and the Damballa team have written a nice paper explaining malware evasion techniques. These automated methods allow bad guys to create massive amounts of unique malware that can circumvent AV software. Popular techniques include using:

1. Crypters

2. Protectors

3. Packers

4. Binders

5. Quality Assurance

See the well-written paper for a more complete discussion. This is why AV products are having to evolve, and quickly.

Oct 5, 2009

Awareness Month

Both are major problems for our society. One is a condition in which cells replicate uncontrollably, the other a premeditated malicious digital attack. In 2009, there are 193,000 new breast cancer cases expected. My mother is a breast cancer survivor, thanks to early detection, great doctors and divine will. And we all likely know someone who is a cyber-security attack survivor: after all, there are 339 million victims of data loss and breaches (see: Data Loss DB and Privacy Rights Clearinghouse).

When it comes to breast cancer, early detection is the key; there are even earlier technologies than the mammogram. But, what's the corollary for cybersecurity? Testing of course! Testing of our knowledge of threats and best practices. And testing of our defenses: whether individual products, or layered defense architectures and policies.

Unfortunately, there is far too little testing going on. Erecting defenses and not periodically evaluating their effectiveness is a far too common practice. Requirements of certain compliance regimes like PCI DSS are helping drive awareness and require at least some level of testing. However, there seems to be a common perception that you can 'set it and forget it.' For technologies like IPS and anti-malware that require constant updating, nothing can be further from the truth.

Sep 21, 2009

Are anti-malware products a commodity?

It is perhaps understandable how one might believe this given all the marketing and the sheer difficulty in empirically discerning otherwise (though less understandable for an analyst). Much of the testing shows scores between 98 and 99%. And other long-standing organizations have essentially declared as much through their certifications. Dozens of products have achieved the Virus Bulletin VB100%(tm) award, and still others tout the West Coast Labs Checkmark(tm) certification as a mark of distinction. And ICSA Labs has certified 52 antivirus products to be up to snuff. So they must all be great, right?

Wrong. This is where real-world independent testing comes in that actually measures meaningful differences, like proactive protection (keeping malware off the machine), time to add protection, and protection over an extended period of time. In our recent Group Test of corporate and consumer endpoint protection products using our Live Testing methodology, we found a dramatic stratification of products' abilities to stop socially engineered malware (the kind that tricks users into clicking 'download and run'), currently the largest infection vector. Here are some key findings from the consumer report:

- Proactive 0-hour protection ranged from 26% to 70%

- Overall protection varied between 67% and 96% (over the course of 17 days of 24x7 testing)

Since we performed these tests on our own, without any vendor funding, we are selling the group test of corporate endpoint protection products. See all the anti-malware product reports.

Which products we tested:

- AVG Internet Security, version 8.5.364

- Eset Smart Security 4, version 4.0.437

- F-Secure Client Security, version 8.01

- Kaspersky Internet Security 2010, version 9.0.0.459

- McAfee VirusScan Enterprise, version 8.7.0 + McAfee Site Advisor Enterprise, version 2.0.0

- Norman Endpoint Protection for Small Business and Enterprise

- Sophos Endpoint Protection for Enterprise - Anti-Virus version 7.6.8

- Symantec Endpoint Protection (for Enterprise), version 11

- Panda Internet Security 2009, version 14.00.00

- Trend Micro Office Scan Enterprise, version 10

Sep 5, 2009

What % of threats do you expect your anti-malware product to stop?

7 simple questions here:

http://www.surveymonkey.com/s.aspx?sm=oiGBnkYL3i_2bBTEE4P24QNA_3d_3d

Thanks for your help

Aug 13, 2009

Q3 2009 Browser Security Tests Published

A key takeaway is that while the other browsers maintained or decreased protection between the two tests, Internet Explorer continued to improve its protection against cybercriminals.

Socially engineered malware is the most common and impactful threat on the Internet today, with browser protection averaging between 1% and 81%. Internet Explorer 8 caught 81% of the socially engineered malware sites over time, leading the next-best browser by 54 percentage points. Safari 4 and Firefox 3 caught 21% and 27% respectively, while Chrome 2 blocked 7% and Opera 10 Beta blocked 1%.

Phishing protection over time varied greatly, between 2% and 83%, among the browsers. Statistically, Internet Explorer 8 at 83% and Firefox 3 at 80% had a two-way tie for first, given the margin of error of 3.6%. Opera 10 Beta exhibited more extreme variances during testing and averaged 54% protection. Chrome 2 consistently blocked 26% of phishing sites, and Safari 4 offered just 2% overall protection. Crashing issues in Firefox 3.5 prevented it from being tested reliably.
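The "two-way tie" reasoning can be sketched in a few lines of Python. This uses a deliberately simple rule, treating two scores as tied when their gap is no larger than the stated 3.6% margin of error. That rule is an assumption made for illustration and is not necessarily the statistical test used in the report.

```python
MARGIN_OF_ERROR = 3.6  # percentage points, as stated in the post

# Phishing protection scores quoted above, in percent.
phishing_scores = {
    "Internet Explorer 8": 83,
    "Firefox 3": 80,
    "Opera 10 Beta": 54,
    "Chrome 2": 26,
    "Safari 4": 2,
}


def statistically_tied(a_pct: float, b_pct: float, margin_pct: float) -> bool:
    """True when the gap between two scores is within the margin of error."""
    return abs(a_pct - b_pct) <= margin_pct


# Find every browser that is tied with the top scorer.
best = max(phishing_scores.values())
leaders = [name for name, score in phishing_scores.items()
           if statistically_tied(best, score, MARGIN_OF_ERROR)]
print(leaders)
```

Under this rule the 3-point gap between Internet Explorer 8 and Firefox 3 falls inside the margin, so both appear in `leaders`, while Opera 10 Beta at 54% does not.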

The full text and analysis of these and other reports on browser security can be found at http://nsslabs.com/browser-security.

NSS Labs' live testing methodology provides accurate, real-world testing of information security products:

- Newly discovered malicious phishing and malware sites were added to the test, which repeated every four hours 24x7 for a minimum of 12 days

- All five browsers tested URLs simultaneously

- All sites were validated before, during and after via multiple methods
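To get a feel for the scale of that schedule, the sketch below enumerates the test runs implied by "every four hours 24x7 for a minimum of 12 days". The start date and the function name are made up for illustration; only the interval and the duration come from the post.

```python
from datetime import datetime, timedelta


def schedule_rounds(start: datetime, days: int = 12, interval_hours: int = 4):
    """Yield the timestamp of every test run in the window."""
    end = start + timedelta(days=days)
    current = start
    while current < end:
        yield current
        current += timedelta(hours=interval_hours)


# Hypothetical start date; the post does not give one.
rounds = list(schedule_rounds(datetime(2009, 7, 1)))
print(len(rounds), "rounds, first at", rounds[0], "last at", rounds[-1])
```

A 12-day window at a 4-hour interval works out to 72 rounds at minimum, each of which re-tests the URLs across all five browsers.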

Aug 3, 2009

Google Drives Security Topics in the Media

The answer included the expected: they rely on contacts, relationships, identifying trends and major news. But almost all of them agreed on this: Google influences the news. Google traffic, page views, etc. Editors are business people too. And the more viewers, the more the property is worth to advertisers. Thus, when Paris Hilton's cell phone gets hacked, or another star's Twitter or Facebook account is compromised, this counts as top news. People want to read it.

Similarly, the panel agreed there was a focus on the 'bad news'; the discovery of a vulnerability or exploit against a popular service or product. It was difficult for journalists to cover the solutions or positive trends as this would come close to promoting products, it was argued.

Jun 25, 2009

Endpoint Protection Group Test Started

Both consumer and corporate products are being evaluated. Stay tuned for more information or contact me with any questions (rmoy).

May 19, 2009

Two acquisitions in two weeks!

Solidcore Systems, which makes memory firewall/application whitelisting products, was acquired by McAfee. The #2 antimalware vendor cum security vendor has added whitelisting to its billion dollar portfolio of antimalware, vulnerability and intrusion prevention products. In Q3 of 2008, NSS Labs had evaluated and certified the S3 Control Embedded product as NSS Approved for Host Malware Protection.

In a down economy, strong vendors go shopping for technologies to round out their product lines so they're in positions of strength when the buyers recover. Note, even with all the cost cutting and layoffs, there's always money left for strategic purposes. And if you're a CEO who is going to make a purchase in this economy, there's not much room for forgiveness. So, you can bet they did their homework on all sides: technology, sales execution, management, margins, balance sheet, etc. I'm pleased NSS Labs was able to help these young companies grow their businesses and wish them well in the next stages of their evolution.

May 15, 2009

NSS Awards First Gold in 5 Years

Yes, it's true. After a long 5 years of waiting for the next great product, at RSA Conference 2009 this year, we bestowed the prestigious NSS Labs Gold Award on IBM/ISS for its Proventia Network IPS GX6116. IBM's was the first IPS to pass our new requirements for Gold, including the monthly recurring Security Update Monitor (SUM) program testing.

The GX6116 scored an average of 98.6% over the 3 consecutive months of testing. This new recurring testing program ensures that vendors are keeping up with current threat protection levels as advertised. Each month our engineers add new attacks to the test set according to our modified CVSS ranking of enterprise-relevant vulnerabilities. Unlike other tests, the vendors do not know which exploits will be used in this blind test. So 98.6% is pretty impressive. Most other products don't do nearly as well.

Also to be commended is the 8Gbps of real-world throughput achieved by the GX. Certainly, the IBM team worked hard and should be proud of their accomplishments on this rigorous test program. Here is Brian Truskowski, General Manager of IBM/ISS, accepting the NSS Gold Award; and his team: Dan Holden, John Pirc, Eric York, Greg Adams.

IBM isn't the only participant in the program. You can look forward to monthly testing from McAfee as well (coming soon).

Mar 31, 2009

Live Testing, web malware and assumptions...

Interestingly we are hearing from two different camps. A few bloggers/journalists are finding their assumptions challenged about their favorite programs; "how can that be?" Meanwhile, 'hard core' security researchers are telling us they are glad to see more comprehensive empirical validation of some of their own data points. Regardless of whether your assumptions were validated or challenged, the data can now drive the conversation - and future research.

Mar 29, 2009

CBS News covers Socially Engineered Malware

Mar 19, 2009

web browser security study - socially engineered malware

Read the full report here: http://nsslabs.com/anti-malware/browser-security

Also notable, this was the industry’s first live test of fresh malware sites. We pulled thousands of URLs off the web in real-time and fed them into 6 different browsers (84 unique machines) every 2 hours. A lot of work went into building this test harness and you'll certainly be hearing more about it shortly. Also keep in mind, while the highest score was Microsoft at 69%, this is nothing to sneeze at. All of the sites were extremely fresh, and the time between detection on the web and testing in the harness was between 30 minutes and 2 hours. Compare this to a VB100, ICSA, West Coast or other wild-list type test where the malware is generally 2+ months old. Our new Live Testing model yields a much more real-world assessment of anti-malware detection rates.

As far as the results, we were pleasantly surprised at just how well IE8 did. Browsers, and IE8 in particular, are becoming a viable extra layer of security on top of anti-malware/endpoint protection.

Note: NSS Labs developed the test methodology and infrastructure independently. Microsoft provided funding.

Jan 23, 2009

First 10Gbps IPS certification: McAfee M-8000 receives NSS Labs Approved

NSS Labs just released the first 10Gbps IPS certification as part of our 10Gbps IPS group test. A number of vendors are offering 10Gbps appliances: Juniper, McAfee, Enterasys, Force10, Sourcefire. McAfee's M-8000 was the first to pass our extensive testing and receive certification. In addition to meeting the rigorous performance requirements, the product scored exceptionally well on the security effectiveness testing. Read the full report here.

Still other vendors are taking the solution approach by including a load balancer and multiple IPS devices. It should be noted that these could use any reasonable switching approach to stack/VLAN multiple physical IPS devices into one logical unit. Think of products from the likes of: IBM, Cisco, Crossbeam (Chassis/Blade), Sourcefire, TippingPoint, TopLayer, etc. Depending on what a company already has installed, and what their growth/infrastructure plans look like, this model could also work well. It will come down to a TCO and effectiveness decision.

It should be noted that this was an award that was a long time in the making since we announced the testing in the summer of 2008; and many vendors had announced products well before that. Indeed there are many reasons why it takes so long. #1 - It's hard to get right. It is not necessarily easy for a vendor that has a 'successful' 1Gbps IPS to deliver the same quality product that truly meets 10Gbps requirements. We just held a technical webinar on the topic of 10Gbps IPS. We covered the challenges that vendors face when making a 10 Gbps IPS, as well as those faced by buyers who are evaluating these products. The webinar is recorded and available here. I was pleasantly surprised to receive several comments that this was the "best webinar ever," and very informative. If you don't have time to listen to the webinar, you can probably at least peruse the slides.

As we've seen in our testing, there are plenty of gotchas to look out for. And for a purchase this large and complex, most of the potential buyers do NOT have the capabilities to adequately evaluate and test such a product. In such cases it should really behoove the vendors who have done a good job to have their products validated by a competent 3rd party. So be sure to ask your vendor what kind of testing and certification the product has gone through. (OK, somewhat of a trick question: I must confess I don't know of any other lab capable of doing the level of testing that we do, either in terms of throughput or security ;-)

/rick

Jan 20, 2009

The value of "reviews" just went down another notch

Belkin is today's unfortunate poster child of dishonest marketing, the euphemistic "putting lipstick on a pig".

When I began my career in IT, a while ago, I relied on user reviews to provide me with some guidance. Which products were better than others, more reliable, faster, etc. The world of user-based reviews has slid a long way. Apparently a sales rep at Belkin had been hiring people on the internet to flag negative reviews of his products as "unhelpful" and post positive ones. There are plenty of other journalists and bloggers lambasting the guy, and the company president for denying and then brushing over the transgression. Amazingly, the employee still has his job. PC World covers the pandemic further here.

Folks: This is why a trusted independent 3rd party

is so important when it comes to getting good advice about products. The financial motivations for individuals with a sales quota and a boss to please, or companies with investors to show returns for can be tempted to cross the line. "users" can be anyone, write anything, and have almost absolute anonymity, and no accountability. Reviews can be written in such a way that they are generic enough to apply to any product, allowing them to spam such services that host reviews. This "review SPAM" (can we coin RSPAM now?) can appear on any magazine site, or portal, regardless of how trusted the mother brand may be.

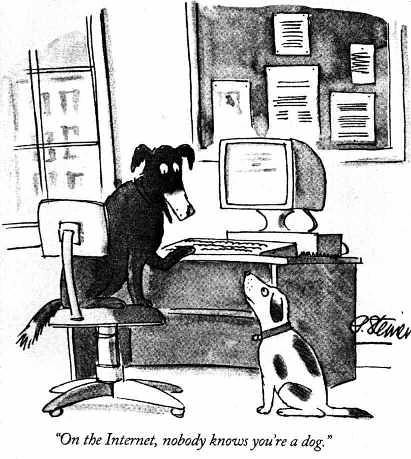

is so important when it comes to getting good advice about products. The financial motivations for individuals with a sales quota and a boss to please, or companies with investors to show returns for can be tempted to cross the line. "users" can be anyone, write anything, and have almost absolute anonymity, and no accountability. Reviews can be written in such a way that they are generic enough to apply to any product, allowing them to spam such services that host reviews. This "review SPAM" (can we coin RSPAM now?) can appear on any magazine site, or portal, regardless of how trusted the mother brand may be.To reach back to a 1990's cartoon that has new meaning here, on the internet, you just don't know which reviews are dogs.

Jan 7, 2009

Webinar: 10Gbps Intrusion Prevention

You can also look forward to some real experiences culled from our 10Gbps IPS group test. The first results are forthcoming at the end of January 2009, and the full report at the end of Q1.